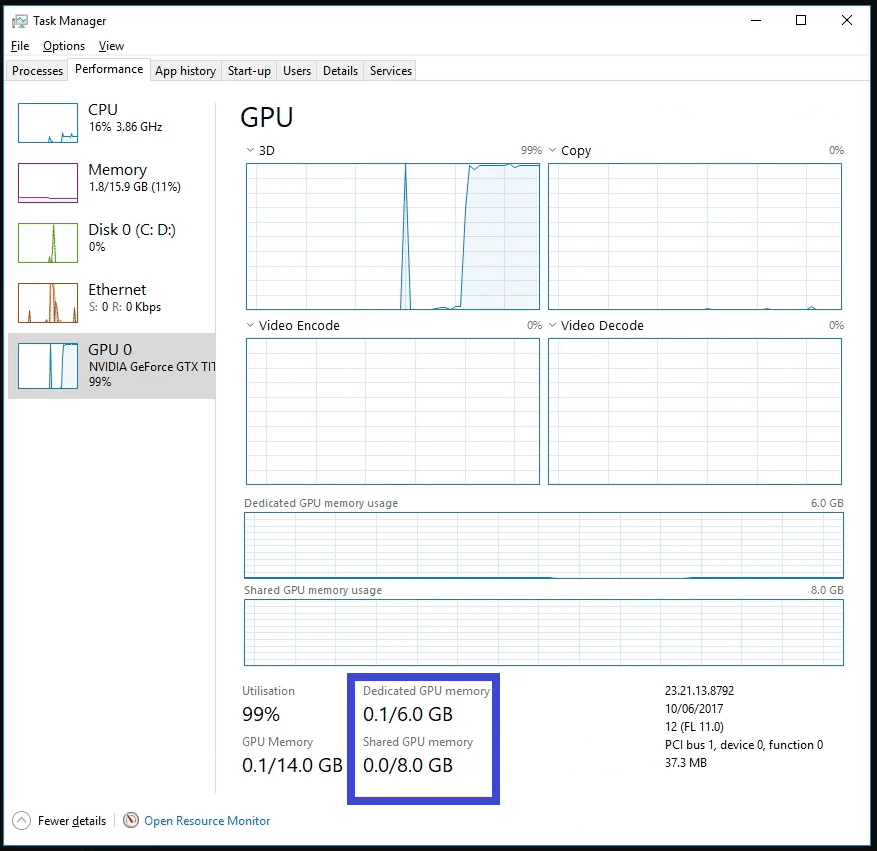

When a GPU’s dedicated graphics memory runs out, the system falls back on shared GPU memory, which is borrowed from system RAM. The amount available is usually capped at about half of the installed RAM.

You might have heard about shared GPU memory if you’re a gamer or use graphics-intensive applications. But what exactly is it? And why is it important? In this article, we’ll explore shared graphics card memory and everything you need to know about it.

Are you someone who loves playing graphics-intensive games? Or do you use applications that require high-end graphics performance? If yes, then understanding shared GPU memory is crucial.

A Graphics Processing Unit (GPU) is a specialized processor designed to handle the complex mathematical operations required for rendering images, videos, and animations. GPUs are critical to delivering a smooth and immersive experience in graphics-intensive applications.

One of the key aspects of GPU architecture is its memory, which stores the data the GPU needs for rendering. Discrete GPUs generally come with their own memory, known as dedicated memory (VRAM), which is separate from the computer’s main memory.

However, when the dedicated memory is insufficient, shared GPU memory comes into play. Shared GPU memory is a portion of the computer’s main system memory (RAM) that is made available to the GPU for storing data required for rendering graphics.
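As a rough illustration of the rule of thumb mentioned at the start of this article, that shared GPU memory is usually capped at about half of the installed RAM, here is a minimal Python sketch. The 50% fraction and the function names are assumptions for illustration only; the actual limit is decided by the operating system and graphics driver.

```python
# Minimal sketch of the "about half of RAM" rule of thumb.
# Assumption: the shared GPU memory cap is a fixed fraction (default 50%)
# of installed RAM; the real cap is set by the OS/driver and may differ.

def shared_gpu_memory_limit_gb(system_ram_gb: float, fraction: float = 0.5) -> float:
    """Return the assumed upper bound on shared GPU memory, in GB."""
    if system_ram_gb < 0:
        raise ValueError("system_ram_gb must be non-negative")
    return system_ram_gb * fraction


def total_gpu_memory_gb(dedicated_gb: float, system_ram_gb: float) -> float:
    """Dedicated VRAM plus the assumed shared-memory cap, in GB."""
    return dedicated_gb + shared_gpu_memory_limit_gb(system_ram_gb)


if __name__ == "__main__":
    # Hypothetical machine: 16 GB of RAM and a graphics card with 8 GB of VRAM.
    print(shared_gpu_memory_limit_gb(16))  # 8.0
    print(total_gpu_memory_gb(8, 16))      # 16.0
```

Note that the cap is an upper bound, not memory that is permanently taken away from the rest of the system.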

How Does Shared GPU Memory Work?

When a graphics-intensive application runs, it requests the GPU to render the graphics by sending instructions and data required for rendering. The GPU retrieves the data from its dedicated memory and renders the graphics.

However, if the dedicated memory is full, the data that does not fit must be stored in, and retrieved from, the main memory instead. This is where shared GPU memory comes into play.

The GPU can use a portion of the main memory allocated to it to store the data required for rendering.

This portion of main memory allocated to the GPU is called shared memory. It acts as overflow space for data that does not fit in dedicated memory. Because shared memory is ordinary system RAM, which a discrete GPU reaches over the PCIe bus, the GPU accesses it at a much slower rate than its dedicated memory.
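The fallback described above can be modeled as a toy allocator: each buffer goes into dedicated memory if it fits, otherwise into shared memory, and the request fails only when neither pool has room. This is a hypothetical Python sketch (the `ToyGpuMemory` class, buffer names, and pool sizes are invented for illustration); real drivers also evict, move, and prioritize resources, which this model ignores.

```python
from dataclasses import dataclass, field


@dataclass
class ToyGpuMemory:
    """Hypothetical model of GPU memory: dedicated VRAM is tried first,
    shared system RAM is used as overflow. All sizes are in MB."""
    dedicated_mb: int
    shared_mb: int
    used_dedicated: int = 0
    used_shared: int = 0
    placements: dict = field(default_factory=dict)

    def allocate(self, name: str, size_mb: int) -> str:
        """Place a buffer and return where it landed: 'dedicated' or 'shared'."""
        if self.used_dedicated + size_mb <= self.dedicated_mb:
            self.used_dedicated += size_mb
            location = "dedicated"
        elif self.used_shared + size_mb <= self.shared_mb:
            # Dedicated memory is too full for this buffer: spill into shared RAM.
            self.used_shared += size_mb
            location = "shared"
        else:
            raise MemoryError(f"{name}: {size_mb} MB does not fit in either pool")
        self.placements[name] = location
        return location


if __name__ == "__main__":
    gpu = ToyGpuMemory(dedicated_mb=4096, shared_mb=8192)
    print(gpu.allocate("textures", 3000))      # dedicated
    print(gpu.allocate("framebuffers", 1500))  # shared (only 1096 MB of VRAM left)
    print(gpu.allocate("geometry", 500))       # dedicated
```

The third buffer still lands in dedicated memory because it is small enough to fit in the remaining VRAM; this simple first-fit policy is only meant to show the spill-over idea.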

Advantages of Shared GPU Memory

One of the primary advantages of shared GPU memory is that it allows the GPU to access more memory than what is available in its dedicated memory. This is particularly useful for applications that require a lot of memory, such as high-resolution gaming or video editing.

Another advantage of shared GPU memory is better utilization of system memory. Because the shared portion is only used when the GPU actually needs it, RAM that the GPU is not using remains available to the CPU and other applications instead of sitting permanently reserved.

Disadvantages of Shared GPU Memory

While shared GPU memory offers several advantages, it also has limitations. The most significant is slower performance: since the GPU has to retrieve data from main memory, which is slower than its dedicated memory, rendering can take longer.

Additionally, the amount of shared memory available to the GPU is limited. If an application requires more memory than what is available in the shared memory, it will lead to performance degradation.
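To get a feel for why spilling into shared memory slows things down, the sketch below estimates an idealized read time from bandwidth alone. The bandwidth figures are illustrative assumptions, not measurements: about 400 GB/s for a card’s dedicated GDDR6 memory and about 32 GB/s for the PCIe 4.0 x16 link a discrete GPU would use to reach system RAM. Real performance also depends on latency, caching, and access patterns, which this model ignores.

```python
# Idealized bandwidth-only model; all figures are illustrative assumptions.

def read_time_ms(data_gb: float, bandwidth_gb_per_s: float) -> float:
    """Time to read data_gb at the given bandwidth, in milliseconds."""
    return data_gb * 1000.0 / bandwidth_gb_per_s


def mixed_read_time_ms(data_gb: float, shared_fraction: float,
                       dedicated_bw: float, shared_bw: float) -> float:
    """Read time when shared_fraction of the data lives in shared memory."""
    if not 0.0 <= shared_fraction <= 1.0:
        raise ValueError("shared_fraction must be between 0 and 1")
    dedicated_part = data_gb * (1.0 - shared_fraction)
    shared_part = data_gb * shared_fraction
    return read_time_ms(dedicated_part, dedicated_bw) + read_time_ms(shared_part, shared_bw)


if __name__ == "__main__":
    # Assumed: 4 GB of data, ~400 GB/s dedicated memory, ~32 GB/s PCIe link.
    for frac in (0.0, 0.5, 1.0):
        t = mixed_read_time_ms(4, frac, dedicated_bw=400, shared_bw=32)
        print(f"{frac:.0%} shared: {t:.1f} ms")
    # 0% shared: 10.0 ms
    # 50% shared: 67.5 ms
    # 100% shared: 125.0 ms
```

In this simplified model, moving only half of the data into shared memory raises the estimated time from 10 ms to 67.5 ms, because the slower link dominates the total.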

Comparison of Dedicated and Shared Graphics Processing Unit Memory

In computer systems that use GPUs (graphics processing units) for gaming or machine learning tasks, there are two types of GPU memory: dedicated and shared.

Dedicated GPU memory (VRAM) is memory physically located on the graphics card and reserved for the GPU. It is separate from the computer’s system RAM (random access memory) used by the CPU (central processing unit), and the GPU uses it exclusively for its own operations.

Dedicated GPU memory is typically faster and more efficient than shared memory.

Shared GPU memory, on the other hand, refers to a portion of the computer’s RAM shared between the CPU and the GPU. This memory is used by both the CPU and the GPU for their respective operations and is typically slower and less efficient than dedicated memory.

The choice between dedicated and shared GPU memory depends on the specific use case and the system’s requirements. In applications that require high-performance graphics processing, such as gaming or video rendering, dedicated GPU memory is usually preferred to ensure smooth and efficient performance.

In other cases, such as machine learning applications where the CPU and GPU work together in parallel, shared GPU memory may be sufficient and more cost-effective.

Does lowering or raising the amount of shared GPU memory make sense?

The optimal amount of shared GPU memory for a computer system depends on the requirements of the applications it runs.

If the system is running applications that require high-performance graphics processing, increasing the amount of shared GPU memory may be beneficial to ensure that the GPU has enough resources to handle the workload.

However, there is a limit to how much shared GPU memory can be allocated without affecting the performance of other system processes that rely on RAM.

Conversely, if the system runs applications that do not require a lot of graphics processing, decreasing the amount of shared GPU memory can free up more RAM for other system processes and improve overall system performance.
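The trade-off in the two paragraphs above can be put into simple arithmetic: RAM lent to the GPU as shared memory is RAM the CPU-side workload cannot use. The sketch below is hypothetical (the function name and the 16 GB and 10 GB figures are invented for illustration) and ignores details such as the operating system’s own reservations and paging behavior.

```python
# Hypothetical RAM budget check; all names and figures are illustrative.

def ram_headroom_gb(system_ram_gb: float,
                    shared_gpu_used_gb: float,
                    cpu_workload_gb: float) -> float:
    """RAM left after the GPU's shared usage and the CPU-side workload.
    A negative result means demand exceeds physical RAM, so the OS would
    likely have to page memory out to disk."""
    return system_ram_gb - shared_gpu_used_gb - cpu_workload_gb


if __name__ == "__main__":
    # Assumed: 16 GB system, CPU-side processes needing 10 GB.
    for shared_used in (2, 8):
        headroom = ram_headroom_gb(16, shared_used, 10)
        status = "ok" if headroom >= 0 else "RAM oversubscribed"
        print(f"shared={shared_used} GB -> headroom={headroom} GB ({status})")
    # shared=2 GB -> headroom=4 GB (ok)
    # shared=8 GB -> headroom=-2 GB (RAM oversubscribed)
```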

Ultimately, the decision to decrease or increase shared GPU memory should be based on the specific requirements of the applications being used and the resources available on the system. Finding the optimal amount of shared GPU memory that maximizes performance for the specific use case may require trial and error.

Conclusion

In summary, shared GPU memory is a portion of the computer’s main memory allocated to the GPU for storing data required for rendering graphics. It offers several advantages, such as better utilization of system memory and the ability to access more memory than is available in dedicated memory.

However, it also has limitations, such as slower performance and limited memory availability.

If you’re a gamer or use graphics-intensive applications, understanding shared GPU memory is key to getting optimal performance.

By knowing how shared GPU memory works and its advantages and disadvantages, you can make better decisions when choosing hardware and optimizing system performance.